Actionable Defense-In-Depth Guide for Cloud Native Applications - Part 1

Setting up external protections around our Web Applications or API Services may not be enough if we do not also use the native security layers of our Public Cloud Providers.

In this article, I will share some important details based on the questions asked during my talks with colleagues. When we start discussing how to harden the security posture of our cloud-native application deployments, we will inevitably begin explaining cloud infrastructure components and basic networking elements to start protecting the environments from the lowest level.

All the developers I engage with already have detailed knowledge of their service-oriented architecture elements and their interactions. Therefore, they can introduce the required cyber protection for their service meshes using many available approaches and methodologies.

As we all know, any cloud-native solution or application consists of application software and an underlying infrastructure. Each layer of our cloud-native applications and deployments requires a different approach to security hardening.

If you focus only on securing your application software and neglect the security hardening your infrastructure requires, you cannot claim a fully secured application or service. In the end, this negligence may lead to a compromised environment or platform.

Foundation Layer of Protection

In this part of the article, I will focus mainly on infrastructure components and their most practical security-hardening approaches. If security monitoring tools and services are configured, they can also flag each of the key points I explain here as a misconfiguration. I list them here so that you can check them whether or not security monitoring is in place.

Since the early days of computing, server infrastructure has been secured with multiple networking components such as routers, firewalls, managed switches, virtual local area networks (VLANs), and so on. All these components are used and configured in company data centers, at co-location service providers, and, more recently, in public cloud providers.

In the beginning, all these components were physical electronic devices. They ran proprietary software from their vendors and required special knowledge to set up and configure. For classic data center companies and operations, having employees with this knowledge and experience was a bare minimum requirement.

In today’s cloud-native world, the physical components still exist at the public cloud providers. However, constantly changing customer needs and the high velocity of development practices make it impossible for these providers to handle every configuration change on their customers’ behalf.

Virtualizing all the required infrastructure resources enabled cloud providers to offer configuration capability and flexibility to their customers. Any networking resource you configure today is a software-defined component running on the networking services of the provider’s virtualization layer.

This flexibility comes at a cost. Cloud providers introduced a shared responsibility model for the security of cloud accounts and deployed applications. I can cover this shared responsibility model, and what it means for each deployment model, in a separate article.

Let’s dive into the details of the most widely used public cloud providers. Our first stop is Amazon Web Services (AWS).

Networking Layers on AWS

On AWS, each resource is bound to a Region. Within every Region, you can configure one or more Virtual Private Clouds (VPCs). You can think of each VPC as an isolated, separate cloud definition. This way, you can deploy many different applications and services in a Region using multiple VPCs without them affecting each other.

The diagram above represents a simplified view of the networking components that provide layered security for a defense-in-depth setup.

The traffic from the internet arrives at the Internet Gateway and Router. You can think of this as the broadband modem at your home or office. After the Router, the traffic passes through the Network Access Control List (NACL). NACL definitions contain Allow and Deny rules based on IP addresses or IP address ranges.

For example, if you do not want traffic from certain sources to reach your VPC and resources, you can create a set of Deny rules for incoming (ingress) traffic. This way, if you deny the address ranges used by the anonymized Tor network, any request or communication attempt from them is rejected at the entrance of your VPC network. Alternatively, if you want to allow traffic only from known address ranges, you can create Allow rules for these IP address ranges and block the rest of the internet. The same applies to outgoing (egress) traffic: you can control how your systems communicate by combining Allow and Deny rules for egress traffic as well.
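To make the blocklist and allowlist scenarios concrete, here is a minimal Python sketch of how a NACL evaluates ingress rules: rules are checked in ascending rule-number order, the first match decides, and if nothing matches the catch-all `*` rule denies the traffic. The `NaclRule` class, the `evaluate_ingress` helper, and the documentation-range addresses are illustrative assumptions, not an AWS API; ports and protocols are omitted for brevity.

```python
from dataclasses import dataclass
from ipaddress import ip_address, ip_network


@dataclass(frozen=True)
class NaclRule:
    rule_number: int  # lower numbers are evaluated first
    cidr: str         # source range for an ingress rule
    action: str       # "allow" or "deny"


def evaluate_ingress(rules, source_ip):
    """Return the action for source_ip: the lowest-numbered matching rule wins."""
    addr = ip_address(source_ip)
    for rule in sorted(rules, key=lambda r: r.rule_number):
        if addr in ip_network(rule.cidr):
            return rule.action
    # Nothing matched, so the catch-all "*" rule denies the traffic.
    return "deny"


# Blocklist: reject a (hypothetical) unwanted range, allow everything else.
blocklist = [
    NaclRule(100, "203.0.113.0/24", "deny"),
    NaclRule(200, "0.0.0.0/0", "allow"),
]
# Allowlist: accept only a (hypothetical) known partner range.
allowlist = [NaclRule(100, "198.51.100.0/24", "allow")]

print(evaluate_ingress(blocklist, "203.0.113.7"))  # deny
print(evaluate_ingress(blocklist, "192.0.2.10"))   # allow
print(evaluate_ingress(allowlist, "192.0.2.10"))   # deny
```

Because NACLs are stateless, a real setup needs a matching set of egress rules for the return traffic as well.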

Network Access Control Lists are associated with subnets, so by applying them to every subnet you can protect your whole VPC. If you want to shape the traffic inside your VPC and between your workloads, you can use Routing Tables, Subnets, and Security Groups.

You can create public and private subnets by defining routes in routing tables and associating each subnet with the appropriate routing table. A private subnet’s routing table has no route to the Internet Gateway, so any workload, application, or service component placed in it cannot communicate directly with the public internet, and external traffic cannot reach these components directly either.
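The public/private distinction comes down to one route. The following Python sketch, under simplified assumptions, classifies a subnet as public when its routing table sends `0.0.0.0/0` to an Internet Gateway; subnets without an explicit association fall back to the main routing table, as they do on AWS. The table and subnet IDs and the `is_public_subnet` helper are hypothetical and only model the idea.

```python
# Hypothetical routing tables: destination CIDR -> route target.
ROUTE_TABLES = {
    "rtb-public": {"10.0.0.0/16": "local", "0.0.0.0/0": "igw-example"},
    "rtb-main": {"10.0.0.0/16": "local"},
}
# Only the web subnet is explicitly associated; others use the main table.
SUBNET_ASSOCIATIONS = {"subnet-web": "rtb-public"}
MAIN_ROUTE_TABLE = "rtb-main"


def is_public_subnet(subnet_id,
                     associations=SUBNET_ASSOCIATIONS,
                     tables=ROUTE_TABLES,
                     main_table=MAIN_ROUTE_TABLE):
    """A subnet is public when its routing table sends 0.0.0.0/0 to an Internet Gateway."""
    table_id = associations.get(subnet_id, main_table)
    default_target = tables[table_id].get("0.0.0.0/0", "")
    return default_target.startswith("igw-")


for subnet in ("subnet-web", "subnet-db"):
    print(subnet, "public" if is_public_subnet(subnet) else "private")
```

Keeping the main routing table free of an internet route means a newly created subnet is private by default unless you deliberately associate it with the public table.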

Going one step further, you can attach security groups directly to your workload instances and define their rules at the protocol and port level. This gives you stricter control over which services can communicate with each other or with AWS managed services such as Amazon Relational Database Service (RDS).
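A common way to express "which service can talk to which service" is to let one security group reference another as the traffic source. The Python sketch below models a hypothetical three-tier layout in which only members of `sg-app` may reach the database port. The group names, ports, and the `is_allowed` helper are illustrative assumptions; it only captures the fact that security groups hold allow rules and deny everything else.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class IngressRule:
    protocol: str      # e.g. "tcp"
    port: int
    source_group: str  # only members of this security group may connect


# Hypothetical three-tier layout: load balancer -> web -> app -> database.
SECURITY_GROUPS = {
    "sg-web": [IngressRule("tcp", 8080, "sg-alb")],
    "sg-app": [IngressRule("tcp", 9000, "sg-web")],
    "sg-db": [IngressRule("tcp", 5432, "sg-app")],  # e.g. a PostgreSQL RDS instance
}


def is_allowed(source_groups, target_group, protocol, port, groups=SECURITY_GROUPS):
    """Security groups hold allow rules only; anything not explicitly allowed is denied."""
    return any(
        rule.protocol == protocol
        and rule.port == port
        and rule.source_group in source_groups
        for rule in groups.get(target_group, [])
    )


print(is_allowed({"sg-app"}, "sg-db", "tcp", 5432))  # True
print(is_allowed({"sg-web"}, "sg-db", "tcp", 5432))  # False: web tier cannot reach the database
```

Unlike NACLs, security groups are stateful, so the response traffic of an allowed connection is permitted automatically; the sketch does not model that part.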

Key Aspects of GCP

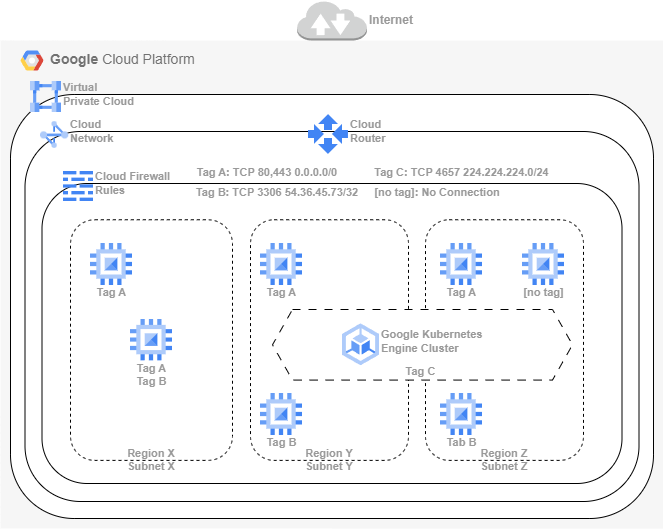

As I highlighted in the first part of the article, each public cloud provider makes different architectural decisions.

When we dive into Google Cloud Platform (GCP), we see a significant difference from AWS. A Virtual Private Cloud (VPC) on GCP is global, while its subnets are regional resources. On AWS, by contrast, a VPC is bound to a single Region and each subnet lives in a single Availability Zone.

This approach brings more flexibility and configuration options. If any of your resources needs to communicate with another resource in a different region, you do not need to set up VPC Peering as long as both are in the same VPC. The routes that GCP creates automatically for each subnet make this possible.

Another advantage of the global VPC is that it simplifies load balancing across deployments spread over multiple regions.

Google Cloud Platform has no Network Access Control Lists or Security Groups. Instead, the roles of these two separate components are combined in Cloud Firewall Rules definitions.

You can define fine-grained Firewall Rules as part of the VPC networking in GCP. To make assignment easy, you can give each firewall rule a target tag. When you add that tag to a workload instance, the firewall rule applies to it automatically.

Firewall rules without target tags apply to every instance in the VPC network. Deleting or disabling all of them therefore prevents uncontrolled traffic from reaching workloads you did not intend to expose.
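The risk of untagged rules is easiest to see by evaluating them. The Python sketch below is a simplified model, not a Google Cloud API: a rule applies to an instance when it has no target tags or shares a tag with the instance, disabled rules are ignored, the lowest priority number wins, a deny beats an allow at equal priority, and if nothing matches the implied deny-ingress rule blocks the traffic. The `FirewallRule` class, the rule names, and the addresses are hypothetical, and ports and protocols are omitted.

```python
from dataclasses import dataclass
from ipaddress import ip_address, ip_network


@dataclass(frozen=True)
class FirewallRule:
    name: str
    priority: int            # lower number = higher priority
    action: str              # "allow" or "deny"
    source_ranges: tuple = ()
    target_tags: tuple = ()  # empty: the rule applies to every instance in the VPC
    disabled: bool = False


def applies_to(rule, instance_tags):
    return not rule.target_tags or bool(set(rule.target_tags) & set(instance_tags))


def evaluate_ingress(rules, instance_tags, source_ip):
    """Return (action, rule name) for ingress traffic from source_ip to an instance."""
    addr = ip_address(source_ip)
    matches = [
        r for r in rules
        if not r.disabled
        and applies_to(r, instance_tags)
        and any(addr in ip_network(cidr) for cidr in r.source_ranges)
    ]
    # Highest priority first; at equal priority a deny rule wins over an allow rule.
    matches.sort(key=lambda r: (r.priority, r.action != "deny"))
    if matches:
        return matches[0].action, matches[0].name
    return "deny", "implied-deny-ingress"


allow_web = FirewallRule("allow-web", 1000, "allow", ("0.0.0.0/0",), ("web",))
legacy = FirewallRule("legacy-allow-all", 1000, "allow", ("0.0.0.0/0",))  # no target tags
disabled_legacy = FirewallRule("legacy-allow-all", 1000, "allow", ("0.0.0.0/0",), disabled=True)

print(evaluate_ingress([allow_web, legacy], ("db",), "198.51.100.9"))
print(evaluate_ingress([allow_web, disabled_legacy], ("db",), "198.51.100.9"))
```

With the untagged rule enabled, the database instance tagged `db` is reachable from anywhere; once that rule is disabled, only the implied deny applies to it while the `web` instances keep their access.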

As you can see in the diagram above, you can restrict the allowed IP addresses or IP address ranges in these Cloud Firewall Rules.

If you need different applications or service deployments with different networking definitions, you can create separate VPCs to achieve your desired architecture deployments.

Even though GCP’s implementation of layered security and defense-in-depth for networking seems simpler than AWS’s, it is easy to forget to disable or delete untagged Firewall Rules, or to apply the wrong tags to the wrong workload resources.

How to Prevent Mistakes

The differences between public cloud providers may be confusing, but you can make your choices based on your needs. Each architecture comes with its pros and cons. This is where proper assessments and evaluations bring the most value in the long run.

Everything you have read so far may sound quite complex. However, if you define these communication boundaries properly in the design phase of the application and implement all the rules and configurations using the Infrastructure as Code (IaC) approach, you will get a properly hardened and repeatable deployment for your solution, with far fewer configuration errors.

You can go one step further by applying tests and policies to the IaC manifests to prevent future mistakes structurally. Running these tests and policies as part of your CI/CD pipelines automates them and introduces security guardrails in the most efficient way.
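As an illustration of such a guardrail, here is a minimal Python policy check that flags the two mistakes discussed above: enabled GCP firewall rules without target tags, and AWS security group ingress open to `0.0.0.0/0` on ports other than an allowed set. The resource dictionaries, type names, and `PUBLIC_PORTS` are a hypothetical, simplified schema rather than the output format of any real IaC tool; you would adapt the parsing to whatever your manifests actually look like.

```python
# Hypothetical, simplified view of resources parsed from IaC manifests.
RESOURCES = [
    {"type": "gcp-firewall-rule", "name": "allow-web", "target_tags": ["web"]},
    {"type": "gcp-firewall-rule", "name": "legacy-allow-all", "target_tags": []},
    {"type": "aws-sg-ingress", "name": "db-from-app", "port": 5432, "cidr": "10.0.1.0/24"},
    {"type": "aws-sg-ingress", "name": "ssh-from-anywhere", "port": 22, "cidr": "0.0.0.0/0"},
]

# Ports we (hypothetically) accept being reachable from the whole internet.
PUBLIC_PORTS = {443}


def find_violations(resources):
    """Return human-readable policy violations; an empty list means the manifest passes."""
    violations = []
    for res in resources:
        if (res["type"] == "gcp-firewall-rule"
                and not res.get("target_tags")
                and not res.get("disabled", False)):
            violations.append(
                f"{res['name']}: enabled firewall rule without target tags applies to every instance")
        if (res["type"] == "aws-sg-ingress"
                and res.get("cidr") == "0.0.0.0/0"
                and res.get("port") not in PUBLIC_PORTS):
            violations.append(f"{res['name']}: port {res.get('port')} is open to the whole internet")
    return violations


for violation in find_violations(RESOURCES):
    print("POLICY VIOLATION:", violation)
```

In a pipeline, a job could run a check like this against the parsed manifests and fail the build whenever the returned list is not empty, so a misconfiguration never reaches a deployed environment.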

If any part of this article raises questions, do not hesitate to leave a comment or send me a direct message to share your thoughts, and I will be happy to explain any topic in more detail.